9.2 – Streaming Stored Video

- Streaming video systems can be classified into 3 categories:

- UDP streaming

- HTTP streaming

- Adaptive HTTP streaming

- The majority of today’s systems employ HTTP streaming and adaptive HTTP streaming.

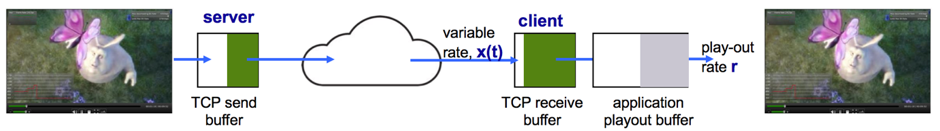

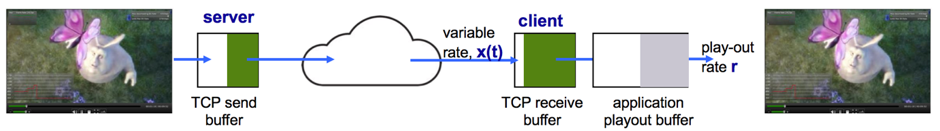

- All 3 forms of video streaming use client-side application buffering to mitigate the effects of varying end-to-end delays and varying amounts of available bandwidth between server and client.

- Users can generally tolerate a small initial delay of several seconds between when the client requests a video and when video playout begins at the client.

- When the video starts to arrive at the client, the client need not immediately begin playout, but can instead build up a reserve of video in an application buffer.

- Once the client has built up a reserve of several seconds of buffered-but-not-yet-played video, the client can then begin video playout.

- There are 2 important advantages provided by client buffering:

- Client-side buffering can absorb variations in server-to-client delay.

- If the server-to-client bandwidth briefly drops below the video consumption rate, a user can continue to enjoy continuous playback, as long as the client application buffer does not become completely drained.

- Example of client-side buffering:

- Suppose that video is encoded at a fixed bit rate, and thus each video block contains video frames that are to be played out over the same fixed amount of time, $$\Delta$$.

- The server transmits the first video block at $$t_0$$, the second block at $$t_0+\Delta$$, the third block at $$t_0+2\Delta$$, and so on.

- Once the client begins playout, each block should be played out $$\Delta$$ time units after the previous block in order to reproduce the timing of the original recorded video.

- Because of the variable end-to-end network delays, different video blocks experience different delays.

- The first video block arrives at the client at time $$t_1$$ and the second block arrives at $$t_2$$.

- The network delay for the $$i^{th}$$ block is the horizontal distance between the time the block was transmitted by the server and the time it is received at the client; note that the network delay varies from one video block to another.

- In this example, if the client were to begin playout as soon as the first block arrived at $$t_1$$, then the second block would not have arrived in time to be played out at $$t_1+\Delta$$. In this case, video playout would either have to be stalled or block 2 would have to be skipped, both resulting in undesirable playout impairments.

- Instead, if the client were to delay the start of playout until $$t_3$$, when blocks 1 through 6 have all arrived, periodic playout can proceed with all blocks having been received before their playout time.
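
The buffering example above can be sketched in a few lines of Python. This is only an illustration: the arrival times are hypothetical (the notes do not give numeric values), times are in milliseconds, and the function names `earliest_safe_start` and `late_blocks` are made up for this sketch. Block $$i$$ (counting from 0) is due at `start + i * delta`, so playout is smooth only if every block has arrived by its due time.

```python
def earliest_safe_start(arrivals, delta):
    """Earliest playout start such that every block arrives by its deadline.

    Block i (0-based) is played at start + i * delta, so we need
    start >= arrivals[i] - i * delta for every i.
    """
    return max(a - i * delta for i, a in enumerate(arrivals))


def late_blocks(arrivals, delta, start):
    """1-based numbers of the blocks that miss their playout deadline."""
    return [i + 1 for i, a in enumerate(arrivals) if a > start + i * delta]


# Hypothetical arrival times (ms) for six blocks; each block holds 1000 ms of video.
arrivals_ms = [1000, 2500, 3200, 4800, 5100, 6000]
delta_ms = 1000

# Starting playout the moment block 1 arrives leaves several blocks late.
print(late_blocks(arrivals_ms, delta_ms, start=arrivals_ms[0]))

# Delaying the start by the worst-case extra delay removes all stalls.
start = earliest_safe_start(arrivals_ms, delta_ms)
print(start, late_blocks(arrivals_ms, delta_ms, start))
```

The notes delay playout until all six blocks have arrived, which is safe but conservative; the sketch shows that any start time at or after the largest value of `arrival - i * delta` is enough.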

9.2.1 – UDP Streaming

- The server transmits video at a rate that matches the client’s video consumption rate by clocking out the video chunks over UDP at a steady rate.

- For example, if the video’s consumption rate is 2 Mbps and each UDP packet carries 8000 bits of video, then the server would transmit one UDP packet into its socket every (8000 bits)/(2 Mbps) = 4 msec.

- UDP streaming typically uses a small client-side buffer, big enough to hold less than a second of video.

- Before passing the video chunks to UDP, the server will encapsulate the video chunks within transport packets specially designed for transporting audio and video, using the Real-Time Transport Protocol (RTP) or a similar scheme.

- In addition to the server-to-client video stream, the client and server also maintain, in parallel, a separate control connection over which the client sends commands regarding session state changes (pause, resume, etc.).

- UDP streaming has 3 significant drawbacks:

- Due to the unpredictable and varying amount of available bandwidth between server and client, constant-rate UDP streaming can fail to provide continuous playout.

- It requires a media control server, such as an RTSP server, to process client-to-server interactivity requests and to track client state for each ongoing client session.

- This increases the overall cost and complexity of deploying a large-scale video-on-demand system.

- Many firewalls are configured to block UDP traffic, preventing the users behind these firewalls from receiving UDP video.
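
The constant-rate pacing described at the start of this subsection reduces to one division. The sketch below reproduces the 2 Mbps / 8000-bit example; the function names are hypothetical, and it only computes the transmission schedule (it does not open a UDP socket).

```python
def packet_interval_ms(bits_per_packet, rate_bps):
    """Time between consecutive packet transmissions, in milliseconds."""
    return bits_per_packet * 1000 / rate_bps


def send_schedule_ms(num_packets, bits_per_packet, rate_bps, t0_ms=0.0):
    """Transmission times (ms) for packets clocked out at a steady rate."""
    interval = packet_interval_ms(bits_per_packet, rate_bps)
    return [t0_ms + i * interval for i in range(num_packets)]


# 8000-bit packets at a 2 Mbps consumption rate: one packet every 4 ms.
print(packet_interval_ms(8000, 2_000_000))
print(send_schedule_ms(4, 8000, 2_000_000))
```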

9.2.2 – HTTP Streaming

- The video is simply stored in an HTTP server as an ordinary file with a specific URL.

- When a user wants to see the video, the client establishes a TCP connection with the server and issues an HTTP GET request for that URL. The server then sends the video file within an HTTP response message.

- On the client side, the bytes are collected in a client application buffer. Once the number of bytes in this buffer exceeds a predetermined threshold, the client application begins playback.

- The use of HTTP over TCP allows the video to traverse firewalls and NATs more easily.

- Streaming over HTTP also obviates the need for a media control server, such as an RTSP server, reducing the cost of a large-scale deployment over the Internet.

- For streaming stored videos, the client can attempt to download the video at a rate higher than the consumption rate, thereby prefetching video frames that are to be consumed in the future.

- This prefetched video is stored in the client application buffer. Prefetching happens naturally with TCP streaming, since TCP’s congestion avoidance mechanism will attempt to use all the available bandwidth between server and client.

- Consider what happens when the user pauses the video. During the pause period, bits are not removed from the client application buffer, even though bits continue to enter the buffer from the server. If the client application buffer is finite, it may eventually become full, which will cause “back pressure” all the way back to the server.

- Specifically, once the client application buffer becomes full, bytes can no longer be removed from the client TCP receive buffer, so it too becomes full.

- Once the client TCP receive buffer becomes full, bytes can no longer be removed from the server TCP send buffer, so it also becomes full.

- Once the server TCP send buffer becomes full, the server cannot send any more bytes into the socket.

- When the client application removes f bits, it creates room for f bits in the client application buffer, which in turn allows the server to send f additional bits. Thus, the server send rate can be no higher than the video consumption rate at the client.

- A full client application buffer indirectly imposes a limit on the rate that video can be sent from server to client when streaming over HTTP.
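
The back-pressure chain above can be illustrated with a toy tick-based model. This is a sketch under simplifying assumptions, not a model of real TCP: buffer sizes are in arbitrary units, data moves at most one hop per tick, and the names (`simulate_backpressure`, `drain`, `chunk`) are hypothetical. With `drain=0` the player is paused; with `drain=1` it consumes one unit per tick.

```python
def simulate_backpressure(app_cap, recv_cap, send_cap, steps, chunk=1, drain=0):
    """Return how many units the server manages to write on each tick.

    Three finite buffers in a chain: server TCP send buffer ->
    client TCP receive buffer -> client application buffer.
    """
    app = recv = send = 0
    written = []
    for _ in range(steps):
        # The player removes up to `drain` units from the application buffer.
        app -= min(drain, app)
        # Client TCP receive buffer -> application buffer, if there is room.
        move = min(recv, app_cap - app)
        recv -= move
        app += move
        # Server TCP send buffer -> client TCP receive buffer, if there is room.
        move = min(send, recv_cap - recv)
        send -= move
        recv += move
        # The server writes into its socket only while the send buffer has room.
        w = min(chunk, send_cap - send)
        send += w
        written.append(w)
    return written


# Paused player: the server stalls once all three buffers (3 + 2 + 2 units) are full.
print(simulate_backpressure(app_cap=3, recv_cap=2, send_cap=2, steps=10))

# Playing at 1 unit per tick: the server's rate settles at the consumption rate.
print(simulate_backpressure(app_cap=3, recv_cap=2, send_cap=2, steps=8, chunk=2, drain=1))
```

In the second run the server would like to send 2 units per tick, but once the buffers fill it can only send as fast as the player drains, which is the rate limit stated above.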

- A simple model of the initial playout delay and freezing due to application buffer depletion:

- Let B denote the size (in bits) of the client’s application buffer, and let Q denote the number of bits that must be buffered before the client application begins playout.

- Let r denote the video consumption rate (the rate at which the client draws bits out of the client application buffer during playback). For example, if the video’s frame rate is 30 frames/sec and each (compressed) frame is 100000 bits, then r = 3 Mbps.

- Let us ignore TCP’s send and receive buffers.

- Let’s assume that the server sends bits at a constant rate x whenever the client buffer is not full.

- Suppose that at time $$t=0$$, the application buffer is empty and video begins arriving to the client application buffer. We now ask at what time $$t=t_p$$ does playout begin? And while we are at it, at what time $$t=t_f$$ does the client application buffer become full?

- First, let’s determine $$t_p$$, the time when Q bits have entered the application buffer and playout begins.

- Recall that bits arrive to the client application buffer at rate x and no bits are removed from this buffer before playout begins.

- The amount of time required to build up Q bits is $$t_p=\frac{Q}{x}$$

- Second, let’s determine $$t_f$$, the point in time when the client application buffer becomes full.

- Observe that if $$x<r$$, then the client buffer will never become full.

- Starting at time $$t_p$$, the buffer will be depleted at rate r and will only be filled at a rate $$x<r$$.

- Eventually the client buffer will empty out entirely, at which time the video will freeze on the screen while the client buffer waits another $$t_p$$ seconds to build up Q bits of video.

- Thus, when the available rate in the network is less than the video rate, playout will alternate between periods of continuous playout and periods of freezing.

- When $$x>r$$, starting at time $$t_p$$, the buffer increases from Q to B at rate $$x-r$$, since bits are being depleted at rate r but are arriving at rate x.
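
The model above can be turned into a small calculator. The notes give $$t_p=\frac{Q}{x}$$ directly; the other two quantities are not stated there and are derived in this sketch from the stated rates. For $$x>r$$, growing from Q to B at rate $$x-r$$ takes $$\frac{B-Q}{x-r}$$, so $$t_f=t_p+\frac{B-Q}{x-r}$$. For $$x<r$$, the Q buffered bits drain at rate $$r-x$$, so each playout period lasts $$\frac{Q}{r-x}$$ before a freeze of $$\frac{Q}{x}$$. The function names and the sample values of Q, B, and x are hypothetical; r = 3 Mbps is taken from the example above.

```python
def playout_start_time(Q, x):
    """t_p: time to accumulate Q bits at fill rate x (bits/sec)."""
    return Q / x


def buffer_full_time(B, Q, x, r):
    """t_f: time at which the buffer first holds B bits, or None if x <= r."""
    if x <= r:
        return None  # the buffer never grows past Q, so it never fills
    return playout_start_time(Q, x) + (B - Q) / (x - r)


def freeze_cycle(Q, x, r):
    """For x < r: (seconds of playout, seconds of freezing) per cycle, else None."""
    if x >= r:
        return None  # no freezing when the network keeps up with playout
    return Q / (r - x), Q / x


r = 3_000_000          # 30 frames/sec * 100000 bits/frame
Q = 4_000_000          # hypothetical playout threshold (bits)
B = 16_000_000         # hypothetical buffer size (bits)

print(playout_start_time(Q, x=4_000_000))        # fast network
print(buffer_full_time(B, Q, x=4_000_000, r=r))
print(freeze_cycle(Q, x=2_000_000, r=r))         # slow network
```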

- HTTP streaming systems often make use of the HTTP byte-range header in the HTTP GET request message, which specifies the range of bytes the client currently wants to retrieve from the desired video.

- Useful when the user wants to reposition to a future point in time in the video.

- When the user repositions to a new position, the client sends a new HTTP request, indicating with the byte-range header the byte in the file from which the server should send data.

- When the server receives the new HTTP request, it can forget about any earlier request and instead send bytes beginning with the byte indicated in the byte-range request.

- When a user repositions to a future point in the video or terminates the video early, some prefetched-but-not-yet-viewed data transmitted by the server will go unwatched, which is a waste of network bandwidth and server resources.

- For this reason, many streaming systems use only a moderate-size client application buffer, or will limit the amount of prefetched video using the byte-range header in HTTP requests.
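
As a rough illustration of the byte-range mechanism, the sketch below builds (but does not send) a GET request carrying a `Range` header using Python's standard `urllib.request`. The URL is a placeholder, and the helper names are hypothetical. Mapping a playback position to a byte offset as `seconds * r / 8` assumes a constant bit rate and ignores any container metadata in the file, which a real player would have to account for.

```python
import urllib.request


def byte_offset(seconds, rate_bps):
    """Approximate byte offset of a playback position (constant-bit-rate assumption)."""
    return int(seconds * rate_bps) // 8


def range_request(url, first_byte, last_byte=None):
    """Build a GET request for bytes first_byte..last_byte (inclusive).

    Leaving last_byte as None asks for everything from first_byte onward.
    """
    end = "" if last_byte is None else str(last_byte)
    headers = {"Range": f"bytes={first_byte}-{end}"}
    return urllib.request.Request(url, headers=headers)


url = "http://example.com/video.mp4"  # placeholder URL

# User repositions to the 60-second mark of a 3 Mbps video.
seek = range_request(url, byte_offset(60, 3_000_000))
print(seek.get_header("Range"))

# Limit prefetching by asking only for the first 1000 bytes.
limited = range_request(url, 0, 999)
print(limited.get_header("Range"))
```

Passing either request to `urllib.request.urlopen` would actually send it; a server that supports range requests normally answers with status 206 (Partial Content).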

- A third type of streaming is Dynamic Adaptive Streaming over HTTP (DASH), which uses multiple versions of the video, each compressed at a different rate.