4.2 – What’s Inside a Router?

- Four router components can be identified:

- Input ports:

- It performs the physical layer function of terminating an incoming physical link at a router; this is shown in the leftmost box of an input port and the rightmost box of an output port in the picture.

- An input port also performs link-layer functions needed to interoperate with the link layer at the other side of the incoming link; this is represented by the middle boxes in the input and output ports.

- A lookup function is also performed at the input port; this will occur in the rightmost box of the input port. It is here that the forwarding table is consulted to determine the router output port to which an arriving packet will be forwarded via the switching fabric.

- Control packets (f.ex. packets carrying routing protocol information) are forwarded from an input port to the routing processor.

- Switching fabric:

- Connects the router’s input ports to its output ports. This switching fabric is completely contained within the router, a network inside of a network router.

- Output ports:

- Stores packets received from the switching fabric and transmits these packets on the outgoing link by performing the necessary link-layer and physical-layer functions. When a link is bidirectional, and output port will typically be paired with the input port for that link on the same line card.

- Routing processor:

- The routing processor performs control-plane functions.

- In traditional routers, it executes the routing protocols, maintains routing tables and attached link state information, and computes the forwarding table for the router.

- In SDN routers, the routing processor is responsible for communicating with the remote controller in order to receive forwarding table entries computed by the remote controller, and install these entries in the router’s input ports.

- The routing processor also performs the network management functions.

- A router’s input ports, output ports, and switching fabric are almost always implemented in hardware (Because the datagram-processing pipeline must operate far too fast than what software can handle (down to a few nanoseconds)).

- Forwarding hardware can be implemented either using a router vendor’s own hardware design, or constructed using purchased merchant-silicon chips.

- A router’s control functions – executing the routing protocols, responding to attached links that go up or down, communicating with the remote controller and performing management functions – operate at milliseconds pr seconds. These control plane functions are thus usually implemented in software and executed on the routing processor.

- Let’s suppose that the interchange is a roundabout, and that as a car enters the roundabout, a bit of processing is required.

- Let’s consider what information is required for this processing:

- Destination-based forwarding:

- Suppose the car stops at an entry station and indicates its final destination. An attendant at the entry station looks up the final destination, determines the roundabout exit that leads to that final destination and tells the driver which roundabout exit to take.

- Generalized forwarding:

- The attendant could also determine the car’s exit ramp on the basis of many other factors besides the destination (license number, state, model, make and year etc.)

- The generalized forwarding, any number of factors may contribute to the attendant’s choice of the exit ramp for the given car.

- Once the car enters the roundabout, it eventually leaves at the prescribed roundabout exit ramp, where it may encounter other cars leaving the roundabout at that exit.

- The analogy above is similar to our network where the entry roads and entry station correspond to the input port; the roundabout corresponds to the switch fabric; and the roundabout exit road corresponds to the output port.

- The forwarding table is copied from the routing processor to the line cards over a separate bus.

- With such a shadow copy at each line card, forwarding decisions can be made locally, at each input port, without invoking the centralized routing processor on a per-packet basis and thus avoiding a centralized processing bottleneck.

- An example of how to handle the forwarding table switching. Lets suppose that our router has four links, numbered 0 through 3, and that packets are to be forwarded to the link interface as follows:

- Destination address range Link interface

- 11001000 00010111 00010000 00000000 0

- 11001000 00010111 00010111 11111111 0

- 11001000 00010111 00011000 00000000 1

- 11001000 00010111 00011000 11111111 1

- 11001000 00010111 00011001 00000000 2

- 11001000 00010111 00011111 11111111 2

- Otherwise 3

- This can be written with 4 entries in the forwarding table:

- Prefix Link interface

- 11001000 00010111 00010 0

- 11001000 00010111 00011000 1

- 11001000 00010111 00011 2

- Otherwise 3

- With this style of forwarding table as in the example, the router matches a prefix of the packet’s destination address with the entries in the table; if there’s a match, the router forwards the packet to a link associated with the match.

- When there are multiple matches, the router uses the longest prefix matching rule;

- It finds the longest matching entry in the table and forwards the packet to the link interface associated with the longest prefix match.

- At Gigabit transmission rates, this lookup must be performed in nanoseconds. Thus, not only must lookup be performed in hardware, but techniques beyond a simple linear search through a large table are needed. Special attention must also be paid to memory access times, resulting in design with embedded on-chip DRAM and faster SRAM memories.

- Ternary Content Addressable Memories (TCAM) are often used for lookup.

- A 32-bit IP address is presented to the memory, which returns the content of the forwarding table entry for that address in essentially constant time.

- The Cisco Catalyst 6500 and 7600 can hold upwards of a million TCAM forwarding table entries.

- Once a packet’s output port has been determined via the lookup, the packet can be sent into the switching fabric.

- In some designs, a packet may be temporarily blocked from entering the switching fabric if packets from other input ports are currently using the fabric.

- A blocked packet will be queued at the input port and then scheduled to cross the fabric at a later point in time.

- Many other actions also needs to be taken when a packet arrives:

- Physical- and link-layer processing must occur

- The packet’s version number, checksum and time-to-live must be checked and the latter two fields rewritten.

- Counters used for network management must be updated

- The input port steps of looking up a destination IP address (“match”) and then sending the packet into the switching fabric to the specified output port (“action”) is specific case of a more general “match plus action” abstractations that is performed in many networked devices, not just routers.

- In link-layer switches, link-layer destination addresses are looked up and several actions may be taken in addition to sending the frame into the switching fabric towards the output port.

- In firewalls an incoming packet whose header matches a given criteria may be dropped.

- In a network address translator, an incoming packet whose transport-layer port number matches a given value will have its port number rewritten before forwarding.

4.2.2 – Switching

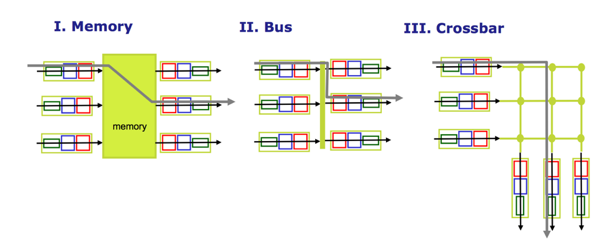

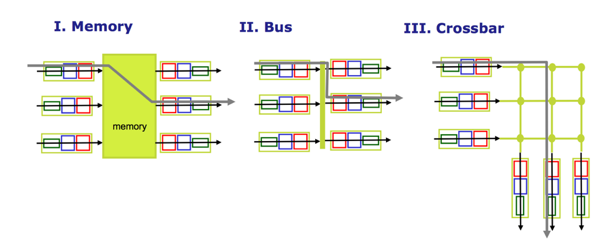

- Switching can be accomplished in a number of ways:

- Switching via memory:

- The earliest routers were normal computers where the CPU controlled the switching between input and output ports where they functioned as traditional I/O devices in a traditional OS.

- An input port with an arriving packet first signaled the routing processor via an interrupt. The packet was then copied from the input port into processor memory.

- The routing processor extracted the destination address form the header, looked up the appropriate output port in the forwarding table, and copied the packet to the output port’s buffer.

- If the memory bandwidth is such that the maximum of B packets per second can be written into, or read from, memory, then the overall forwarding throughput must be less than B/2

- Two packets cannot be forwarded at the same time even if they have different destination ports.

- Some modern routers switch via memory, but the lookup and storing into the appropriate memory location is performed by processing on the input line card (which make them look like shared memory multiprocessors).

- Switching via Bus:

- An input port transfers a packet directly to the output port over a shared bus, without intervention by the routing processor.

- This is typically done by having the input port pre-pend a switch-internal label to the packet indicating the local output port to which this packet is being transferred and transmitting the packet onto the bus.

- All output ports receive the packet, but only the port that matches the label will keep the packet. The label is then removed at the output port, as this label is only used within the switch to cross the bus.

- If multiple packets arrive at the router at the same time, each at a different input port, all but one must wait since only one packet can cross the bus at a time.

- Because every packet must cross the single bus, the switching speed of the router is limited to the bus speed.

- Cisco 6500 router internally switches packets over 32-Gbps-backlane bus.

- Switching via an interconnection network

- Use a more sophisticated interconnection network, such as those that have been sued in the past o interconnect processors in a multiprocessor computer architecture.

- A crossbar switch is an interconnection network consisting of 2N buses that connect N input ports to N output ports. Each vertical bus intersects each horizontal bus at a crosspoint, which can be opened or closed at any time by the switch fabric controller.

- When a packet arrives from port A and needs to be forwarded to port Y, the switch controller closes the crosspoint at the intersection of busses A and Y, and port A then sends the packet onto its bus, which is picked up by the bus Y.

- A packet can go from port B to port X at the same time as its different input and output ports.

- If a packet from port A and Port B are going to port Z at the same time, then they have to queue.

- A crossbar switch is non-blocking.

- Cisco 12000 series use crossbar while Cisco 7600 series can either use bus or crossbar.

- More sophisticated interconnection networks use multiple stages of switching elements to allow packets from different input ports to proceed towards the same output port at the same time through the multi-stage switching fabric.

- Cisco CRS employs a three-stage non-blocking switching strategy.

- A router’s switching capacity can also be scaled by running multiple switching fabrics in parallel. Input ports and output ports are connected to N switching fabrics that operate in parallel. An input port breaks a packet into K smaller chunks, and sends the chunks through K of these N switching fabrics to the selected output port, which reassembles the K chunks back into the original packet.

4.2.3 – Output Port Processing

- Output port processing takes packets that have been stored in the output port’s memory and transmits them over the output link. This includes selecting and de-queueing packets for transmission, and performing the needed link-layer and physical-layer transmission functions.

4.2.4 – Where Does Queueing Occur?

- The location and extent of the traffic load, the relative speed of the switching fabric, and the line speed.

- Because queues can grow large, the router’s memory can eventually be exhausted and packet loss will occur when no memory is available to store arriving packets. This is where packets are dropped and lost.

- Suppose that the input and output line speeds all have an identical transmission rate of $$R_{line}$$ packets per second, and that there are N input ports and N output ports.

- Let’s assume that all packets have the same fixed length, and that packets arrive to input ports in a synchronous manner (the time to send a packet on any link is equal to the time to receive a packet on any link, and during such an interval of time, either zero or one packet can arrive on an input link)

- Define the switching fabric transfer rate $$R_{switch}$$ as the rate at which packet can be moved from input port to output port

- If $$R{switch}$$ is N times faster than $$R{line}$$, then only negligible queueing will occur at the input ports. This is because even in the worst case, where all N input lines are receiving packets, and all packets are to be forwarded to the same output port, each batch of N packets can be cleared through the switch fabric before the next batch arrives.

- If the switch fabric is not fast enough then packet queueing can also occur at the input ports, as packet must join input port queues to wait their turn to be transferred through the switching fabric to the output port.

- Consider a crossbar switching fabric where all link speeds are identical, that one packet can be transferred from any one input port to a given output port in the same amount of time it takes for a packet to be received on an input link, and packets are moved from a given input queue to their desired output queue in an FCFS manner.

- Multiple packets can be transferred in parallel, as long as their output ports are different. However, if two packets at the front of two input queues are destined for the same output queue, then one of the packets will be blocked and must wait at the input queue.

- Head-of-line (HOL) Blocking in an input-queue switch:

- A queued packet in an input queue must wait for transfer through the fabric (even though its output port is free) because it is blocked by another packet at the head of line.

- The input queue will grow to unbounded length under certain assumptions as soon as the packet arrival rate on the input link reaches only 58% of their capacity.

- Suppose that $$R{switch}$$ is again N times faster than $$R{line}$$ and that packets arriving at each of the N input ports are destined to the same output port. In this case, in the time it takes to send a single packet onto the outgoing link, N new packets will arrive at this output port.

- Since the output port can transmit only a single packet in a unit of time, the N arriving packets will have to queue for transmission over the outgoing link. Then N more packets can possibly arrive in the time it takes to transmit just one of the N packets that had just previously been queued.

- Thus, packets queues can form at the output ports even when the switching fabric is N times faster than the port line speeds. Which will lead to exhaust of the available memory at the output port.

- When the buffer is full it can either drop the arriving packets (drop-tail) or remove one or more already-queued packets to make room for the newly arrived packet, this can be useful to provide a congestion signal to the sender.

- A number of proactive packet-dropping and marking policies (known as Active queue management (AQM)) has been proposed and analyzed where the most popular is Random Early Detection (RED)

- When there’s multiple packets in a queue, the packet scheduler at the output port must choose one packet among those queued, for transmission.

- How much buffering Is required?

- Earlier beliefs:

- The buffer size was the amount equal to an average round-trip time times the link capacity.

- A 10 Gbps link with an RTT of 250 msec would need an amount of buffering equal to $$buffer = RTT \cdot capacity = 2.5$$ Gbits of buffers.

- Newer research:

- When there are a large number of TCP flows (N) passing through a link, the amount of buffering needed is $$Buffer = \frac{RTT\cdot capacity}{\sqrt{N}}$$ with a large number of flows typically passing through large backbone router links, the value of N can be large, with the decrease in needed buffer size becoming quite significant.

4.2.5 – Packet Scheduling

- There’s 3 different way to queue packets:

- First-in-first-out (FIFO)

- Priority Queuing

- Round Robin and Weighted fair Queueing (WFQ)

- First-in-first-out (FIFO):

- Packets arriving at the link output queue wait for transmission if the link is currently busy transmitting another packet. If there is not sufficient buffering space to hold the arriving packet, the queue’s packet-discarding policy then determines whether the packet will be dropped (lost) or whether other packets will be removed from the queue to make space for arriving packets.

- FIFO selects packets in the order they arrived at the output link queue.

- Priority Queuing:

- Packets arriving at the output link are classified into priority classes upon arrival at the queue.

- In practice, a network operator may configure a queue so that packets carrying network management information receive priority over user traffic; additionally, real-time voice-over-IP packets might receive priority over non-real traffic such as SMTP or IMAP e-mail packets.

- Priority class typically has its own queue. When choosing a packet to transmit, the priority queueing discipline will transmit a packet from the highest priority class that has a nonempty queue.

- Under a non-preemptive priority queuing discipline, the transmission of a packet is not interrupted once it has begun.

- Round Robin and Weighted fair Queueing (WFQ):

- Packets are sorted into classes as with priority queueing. However, rather than there being a strict service priority among classes, a round robing scheduler alternates service among the classes.

- A so-called work-conserving queueing discipline will never allow the link to remain idle whenever there are packets queued for transmission. A work-conserving round robing discipline that looks for a packet of a given class but find none will immediately check the next class in the round roving sequence.

- A generalized form of round robing queueing is weighted fair queuing (WFQ) discipline.

- WFQ differs from round robin in that each class my receive a differential amount of service in any interval of time. Specifically, each class, i, is assigned a weight, $$w_i$$, under WFQ, during any interval of time during which there are class I packets to send, class i will then be guaranteed to receive a faction of service equal to $$\frac{w_i}{\Sigma w_j}$$ where the sum in the denominator is taken over all classes that also have packets queued packets, class i will still be guaranteed to receive a fraction $$\frac{w_i}{\Sigma w_j}$$ of the bandwidth, where in this worst case the sum in the denominator is over all classes. Thus, for a link with transmission rate R, class I will always achieve a throughput of at least $$\frac{R\cdot w_i}{w_j}$$.

- Packets are discrete and a packet’s transmission will not be interrupted to begin transmission of another pack.